I recently read a blog post that made the following statement:

“The candidates we are interviewing all have senior titles and 8+ years of experience, yet a number of them have struggled to find the max value in an array, and one couldn’t even start because of the inability to use a for-loop. After seeing this, I requested we move the programming exercises to the start, because all else becomes irrelevant if they fail there. Moving them has helped us reject candidates earlier when it becomes clear they haven’t passed through our filter. All of our candidates had great looking resumes, fancy titles, and can easily recite their job history with a smile, but put them in front of an IDE and all pretense quickly falls away.”

I know the author and have a lot of respect for them, but in this case I could not disagree more with the conclusions they have reached.

Let me explain:

One well-known take on programming is that there are two big problems in writing code: naming and premature optimization. This post is about the latter: premature optimization.

Unfortunately, this (very common) approach to coding interviews misses the value that senior developers add. Let me start with this: despite 40+ years of programming experience, I still can’t do these exercises, which are essentially performance art, in an interview. I am an introvert with imposter syndrome, so putting me on the spot to prove my knowledge is a disaster. I can’t think clearly at that point.

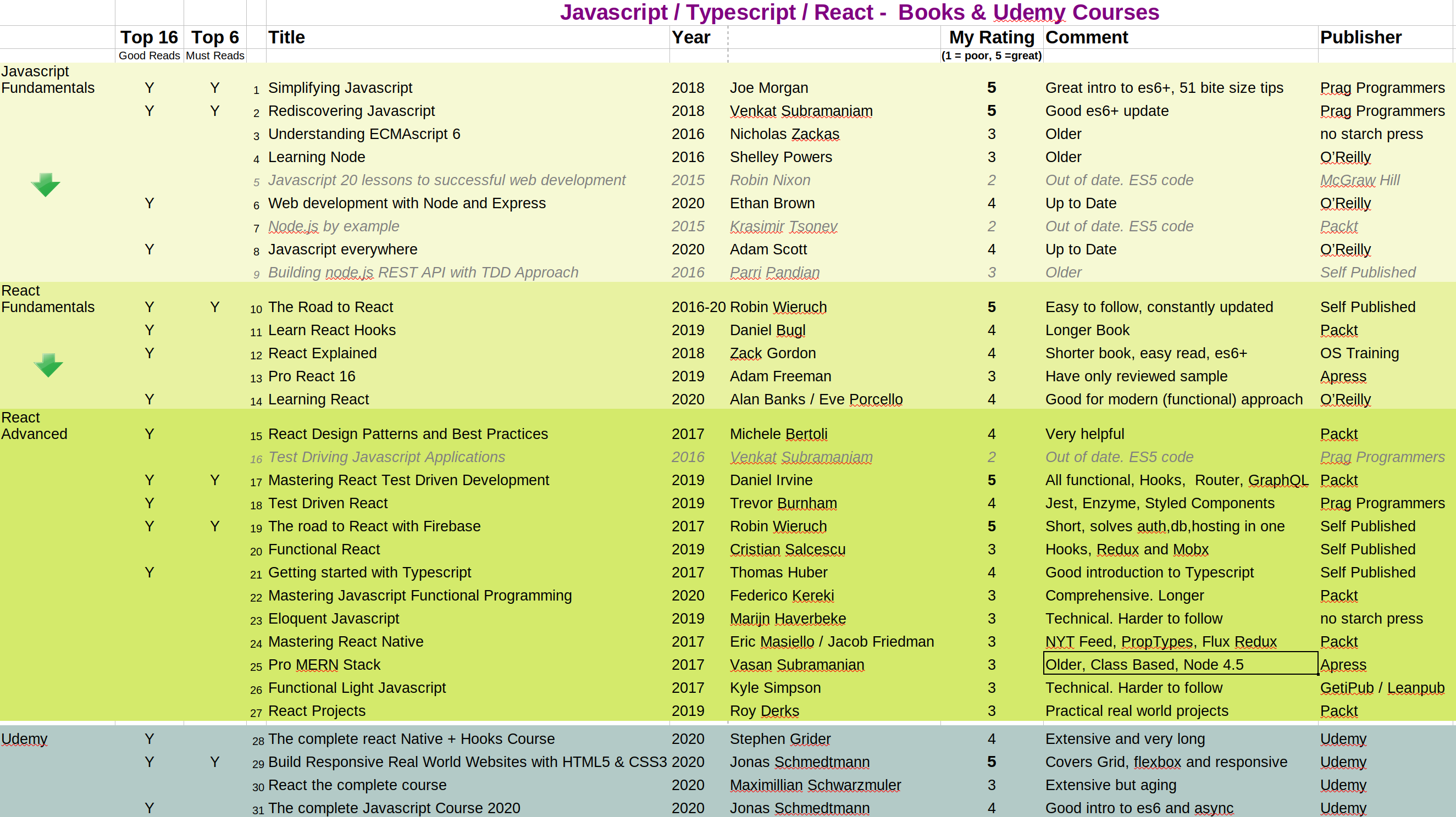

Ironically, I got fairly good at these abstract programming exercises about 20 years ago, when I temporarily accepted the (false) premise that performance and efficiency are everything when hiring a programmer. Over time I learned that these are not the problems I face in the companies that hire me. When I want to sort items, 99.99% of the time `.sort()` is all I need, because it was already optimized in the languages I use 20-30 years ago.

I assumed these out-of-date exercises would soon be replaced. I was very wrong. “Data structures and algorithms” testing has become more common since then, as a (very poor) substitute for evaluating a programmer.

Today I detest these exercises, because what I crave is a conversation about the problems the company is experiencing in its codebase (there are always problems!). Unfortunately, similar to dating, most interviewers don’t want to admit to or talk about the problems. Only very senior devs get this.

So instead of a mature conversation about problems, we are left with abstract problems that have already been solved. Fail.

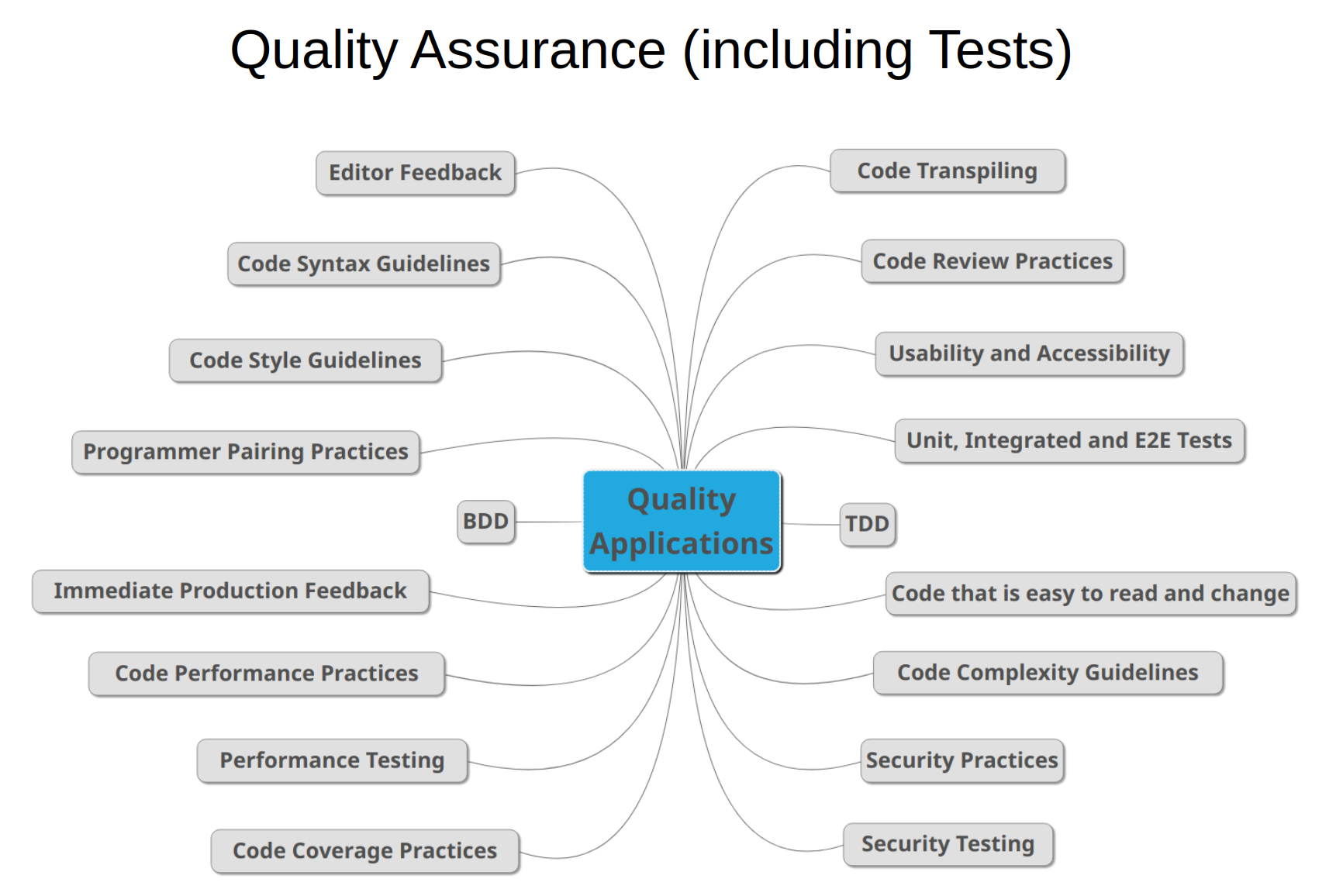

I also noticed that the codebases in EVERY company I have worked in (other than my own recent ones) were a huge mess. Low unit test coverage. Complex code that only one person could maintain. Massive classes and methods. No documentation in the form of tests. No infrastructure as code (IaC). What do the programmers who work in these companies know how to do, based on the ‘Big O hiring process’? Produce memory- and time-efficient (Big O) code? What problem does that solve in their actual job? Most developers are using a framework, for example React, and the problems they face and need to solve are almost universally NOT about Big O.

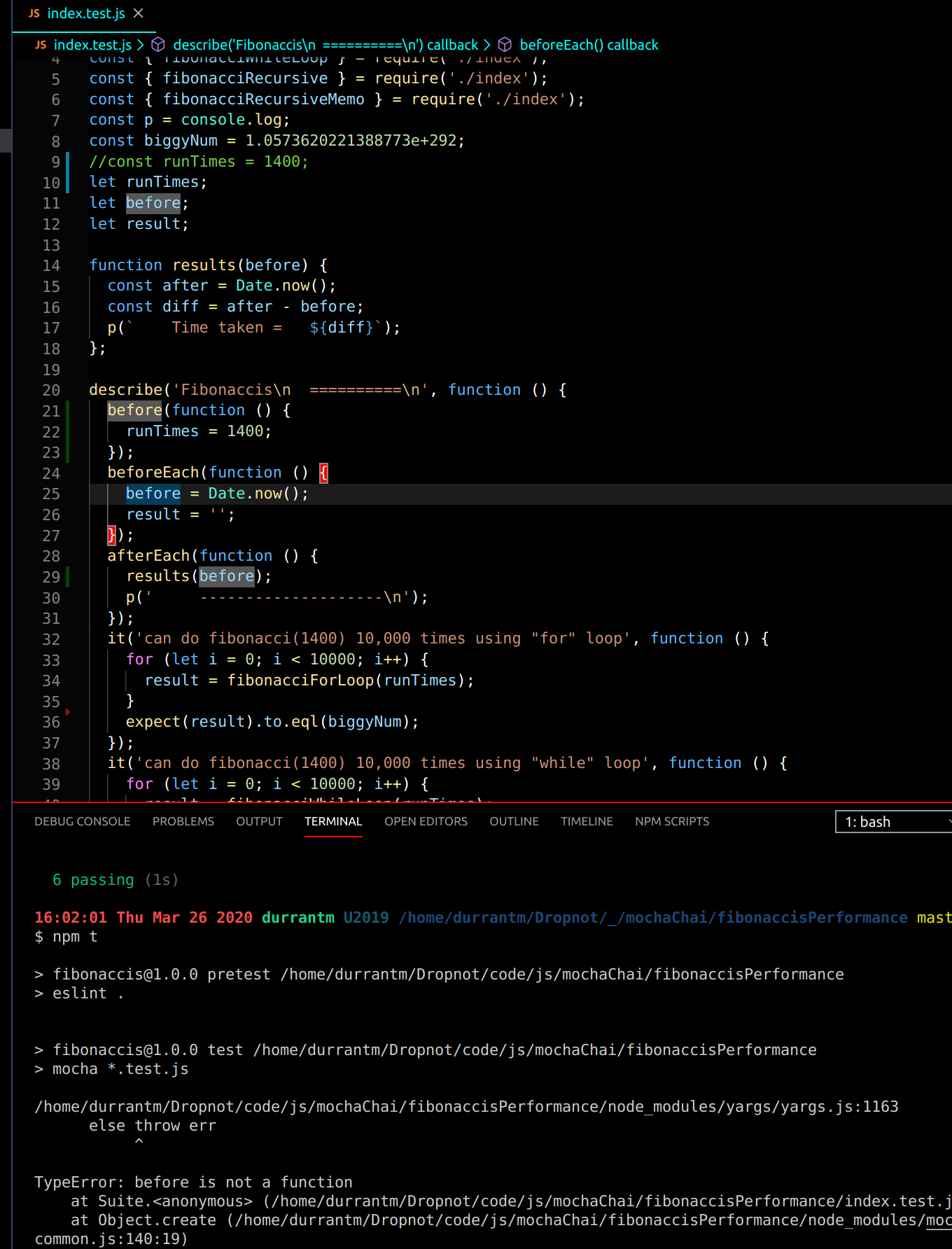

Instead of testing abstract Big O implementations of number sorting, how about talking about unit testing first? How about talking about the use of English in naming things? That is another MASSIVE problem that isn’t covered in interviews, yet on Day 1 of the job the naming horrors in the codebase are revealed.

What about modularity? What about the philosophy of breaking things down? What is the state of this in the company’s ACTUAL codebase?

What about linting? This is one of the biggest quality items for me. I favor a massive list of linting rules for consistency and feedback, and I could spend several hours in interviews just talking about linting. Do you talk about linting in interviews?

Finally, I am neurodiverse, and I find any of the ‘I challenge you to write code in front of me and then respond to my challenges about performance’ interview exercises too stressful to think clearly: I am in fight-or-flight mode and my higher thinking has shut down. This is different from the pair-programming collaboration that I favor and love doing with fellow programming enthusiasts.

I beg you and all who read this:

Stop with the Big O, abstract, performance-acting way of interviewing people. Your codebase will thank you.